12/13/2024 - Articles

An overview of the basics and development approaches of AI

Since the end of 2023, we have been looking at the possibilities of AI – how can we make this technology usable for Projektron BCS? In a development project, we familiarized ourselves with the technology and developed some basics for possible product applications.

This article gives you an insight into the technology behind the currently leading AI language models. In particular, we will discuss the transformer architecture, which enables machines to accurately understand language and generate text. These models can be customized by adapting and training them to specific requirements – for example, as an assistant in Projektron BCS. In a follow-up article, we will show how we developed the Projektron AI Help and other applications and how they enrich our software.

Transformer Architecture: Attention is everything

Transformer architecture is at the heart of modern language models like ChatGPT. First introduced in 2017, it has revolutionized language processing. Unlike older models that processed information sequentially, Transformer technology allows for parallel analysis of the entire input – and that is what makes it so efficient and powerful.

The Transformer architecture was initially presented by a group of Google employees in 2017 with the publication “Attention Is All You Need” (source: https://arxiv.org/abs/1706.03762).

What is special about this architecture is the attention mechanism. It calculates which parts of a text are particularly important for understanding the context of a word. To do this, each input is first converted into a machine-readable, numerical form using vector and matrix operations.

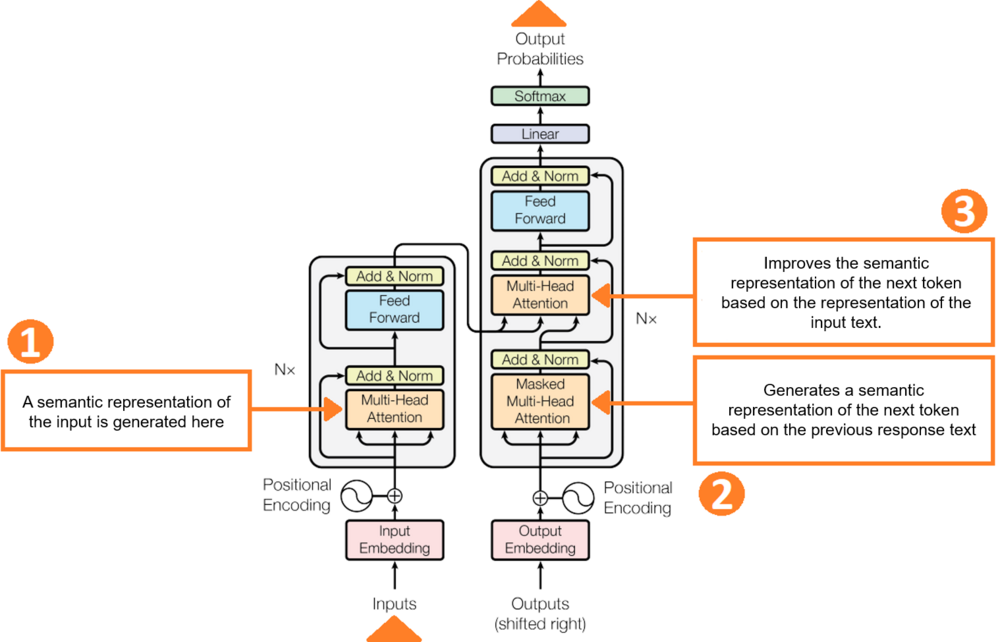

As the following graphic shows, the attention mechanism occurs in three places in the architecture. This makes it possible to process the entire input in parallel. Previous models (such as LSTM) processed the input sequentially, which made it more difficult to map the context over a longer sequence.

From token to output: the process in detail

Step 1: Tokenization – breaking the input into processable units

In the first step, the user input is “tokenized”. This involves breaking the input text into smaller units, known as tokens. A token can be a word, part of a word, or even a single character, depending on the tokenization method chosen.

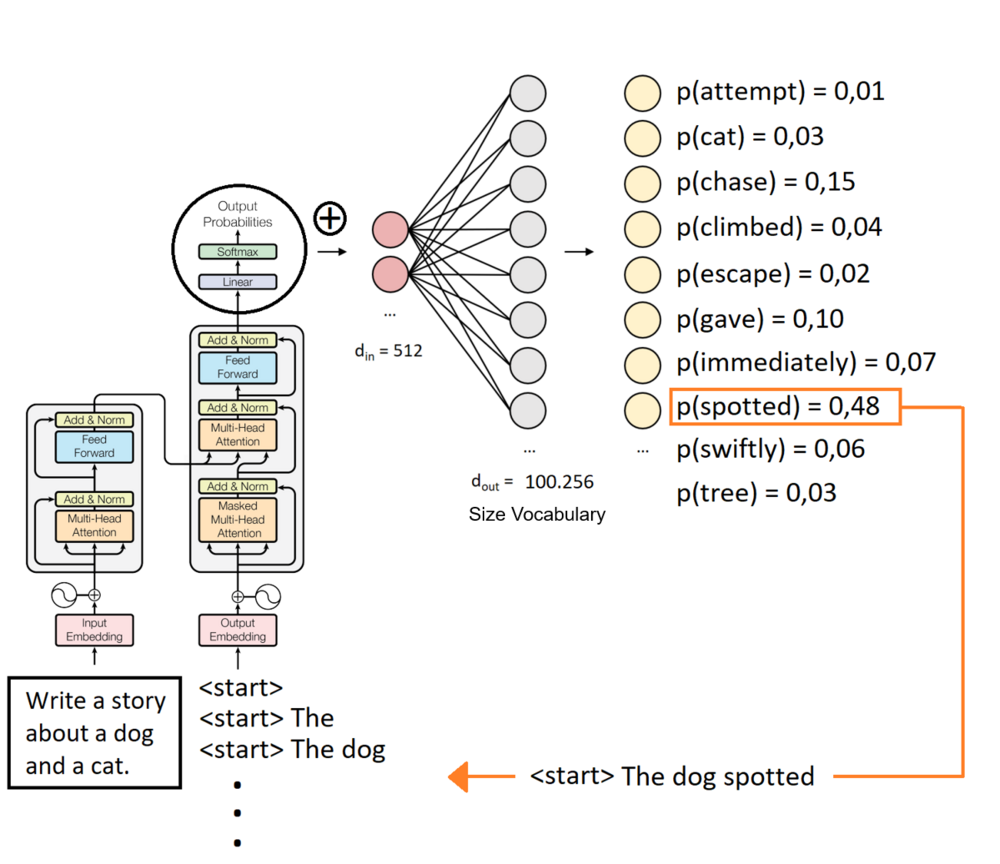

The models work with a fixed vocabulary (the token set), which in the case of OpenAI's GPT-4 comprises 100,256 tokens. The maximum text size that can be processed at one time is also measured in tokens, as are the costs. The goal is to divide the text into units that the model can analyze efficiently.

To try out how a text is converted into tokens, there is a freely accessible page. If you want to take a look at the complete token set of OpenAI's GPT-4, you can do so at https://gist.github.com/s-macke/ae83f6afb89794350f8d9a1ad8a09193.

The token set is generated by analyzing large amounts of text using byte-pair encoding. An explanation can be found here: https://huggingface.co/learn/nlp-course/chapter6/5
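The idea behind byte-pair encoding can be sketched in a few lines of Python. This is a simplified toy version: it works on the characters of whole words rather than on bytes, the training words are made up for illustration, and it is not the tokenizer that OpenAI uses.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` by one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe_merges(words, num_merges):
    """Learn merge rules by repeatedly merging the most frequent pair."""
    # Every word starts out as a sequence of single characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[merge_pair(symbols, best)] += freq
        corpus = new_corpus
    return merges


def tokenize(word, merges):
    """Apply the learned merges, in the order they were learned."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Made-up training data: frequent character sequences become single tokens.
training_words = ["low", "low", "low", "lower", "lowest", "new", "newer"]
merges = learn_bpe_merges(training_words, num_merges=3)
print(merges)
print(tokenize("low", merges), tokenize("lowest", merges))
```

Frequent words end up as a single token, while rarer words are assembled from several pieces. This is also why text in a language that is well represented in the training data needs fewer tokens.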

Since English is by far the most prevalent language in the training texts, English texts with the same meaning can be tokenized more cheaply, i.e. they require fewer tokens, the response comes faster, and the costs are lower than for other languages.

Step 2: Vectorization – How Words Are Mathematically Represented

The attention mechanism is essentially based on vector and matrix calculations. After the text has been split into tokens, each of these tokens is translated into a vector (“vector embeddings”). This gives the model a numerical representation of the text, which serves as a basis for processing and calculating the relationships.

Which vector a token maps to is determined by analyzing large amounts of text. The vector of a word is derived from the words that surround it. For example, one can imagine that synonyms such as “cat” and “house cat” yield very similar vectors because they occur in largely the same word environments. “House cat” will appear more often in domestic contexts, so its vector will be somewhat more “domestic”. An explanation of how vectorization works in detail can be found here: https://medium.com/@zafaralibagh6/a-simple-word2vec-tutorial-61e64e38a6a1

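The vectors in the original Transformer paper have 512 entries (“dimensions”). Larger models use longer vectors; OpenAI has not published the figure for GPT-4.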
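To make the idea of “similar environments, similar vectors” tangible, here is a minimal sketch that simply counts neighboring words. It is an assumption-laden toy: real embeddings such as word2vec are learned with a neural network and are dense, and the example sentences are invented.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Represent each word by the counts of the words in its neighborhood."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            start, end = max(0, i - window), min(len(words), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a if key in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented mini corpus: "cat" and "kitty" occur in the same environments.
sentences = [
    "the cat sleeps on the sofa",
    "the kitty sleeps on the sofa",
    "the cat chases the mouse",
    "the kitty chases the mouse",
    "the engine drives the car",
]
vectors = cooccurrence_vectors(sentences)
print(cosine(vectors["cat"], vectors["kitty"]))
print(cosine(vectors["cat"], vectors["engine"]))
```

In this toy corpus the two synonyms receive the same neighborhood counts, so their similarity is higher than that of “cat” and “engine”.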

Step 3: Position Encoding – Understanding the order of words

Since the transformer architecture processes all user input in a single step, no information about the order of the tokens is available per se. In order for the model to understand which tokens are related, positional information is added to each token. This positional encoding ensures that the model can capture not only the meaning of the individual tokens, but also their order and relationships to each other. This is important because the meaning of a sentence depends heavily on the word order.
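One way to add positional information is the sinusoidal encoding from the original paper “Attention Is All You Need”. The following sketch implements that formula in plain Python. Whether a given current model uses this scheme or a learned or different variant is not stated here, so treat it as an illustration of the principle only.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the same wavelength.
        exponent = (i - i % 2) / d_model
        angle = position / (10000 ** exponent)
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def add_positions(token_vectors):
    """Add the position vector to each token vector, element by element."""
    d_model = len(token_vectors[0])
    return [
        [x + p for x, p in zip(vector, positional_encoding(pos, d_model))]
        for pos, vector in enumerate(token_vectors)
    ]


# The same (made-up) token vector at two positions yields different inputs.
same_token_twice = [[1.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]]
for row in add_positions(same_token_twice):
    print([round(value, 3) for value in row])
```

Because the position vector is simply added to the token vector, the model receives one combined vector per token that carries both meaning and position.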

Step 4: Attention mechanism – how the context of a word is calculated

From your math lessons, you may still remember that you can multiply vectors. The result of this scalar product (dot product) is a real number, not a vector. If the vectors are normalized beforehand, then the larger the result, the more similar the vectors are.

In simple terms, the attention mechanism calculates the importance of a word for all words in the text by multiplying the corresponding vectors. A high attention score indicates that the words are significant for each other. The greater the scalar product of the vectors of two words, the stronger their connection. This step makes it possible to map meaningful relationships and connections between words.

Ultimately, this can be traced back to the way we determined the word vectors (if we briefly recall how we did this): the words in the training texts appeared in similar word environments, or in other words, they themselves often occurred in close proximity in the texts examined: they are therefore related.

This multiplication gives us an attention value for each word in the text for a particular word. In the next step, we multiply the attention values by the associated word vectors to obtain the weighted word vectors. These are now all added up, and for the word we are examining, they yield a vector that represents the word with the entire context. This calculation is carried out for each word in the text.
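The calculation described above can be sketched as follows. This is a deliberately simplified version: it uses the word vectors directly and normalizes the attention values with a softmax so that they add up to 1. A real transformer additionally applies learned query, key and value projections, scales the scores, and uses several attention heads; none of that is shown here. The example vectors are made up.

```python
import math


def dot(a, b):
    """Scalar product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def simple_self_attention(vectors):
    """For each word, build a context vector as a weighted sum of all words."""
    contextualized = []
    for query in vectors:
        # Attention values of every word in the text for this word.
        weights = softmax([dot(query, other) for other in vectors])
        context = [
            sum(weight * vector[d] for weight, vector in zip(weights, vectors))
            for d in range(len(query))
        ]
        contextualized.append(context)
    return contextualized


# Three made-up 2-dimensional word vectors.
word_vectors = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
for context in simple_self_attention(word_vectors):
    print([round(value, 3) for value in context])
```

Each output vector is a mixture of all input vectors, in which words with a large scalar product contribute more.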

The transformer contains three attention mechanisms, each with a slightly different focus, as indicated in the graphic. The third mechanism finally generates a vector from which the probability of the next token in the response sequence is calculated in the last “linear” layer of the transformer. The number of neurons in the last layer of the transformer is therefore equal to the number of tokens the machine works with. The following image shows the process.

The output of the model is finally created by repeatedly selecting the most probable tokens until the end-of-sequence token (<EOS>) is reached. This iterative process results in a text sequence that reads as if it were written by a human.
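The generation loop itself is simple, as the following sketch shows. The “model” here is a hypothetical lookup table that only looks at the last token; a real language model computes the probabilities from the entire preceding sequence.

```python
# Hypothetical toy "model": next-token probabilities given the last token.
NEXT_TOKEN_PROBS = {
    "<BOS>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 1.0},
    "cat": {"sleeps": 0.7, "<EOS>": 0.3},
    "dog": {"sleeps": 0.5, "<EOS>": 0.5},
    "sleeps": {"<EOS>": 1.0},
}


def generate_greedy(start="<BOS>", max_tokens=20):
    """Append the most probable token until <EOS> or the length limit."""
    sequence = [start]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[sequence[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "<EOS>":
            break
        sequence.append(next_token)
    return sequence[1:]  # drop the start marker


print(" ".join(generate_greedy()))
```

The `max_tokens` limit is an added safeguard so that the loop also ends if the end-of-sequence token is never selected.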

Sampling: Creativity through controlled randomness

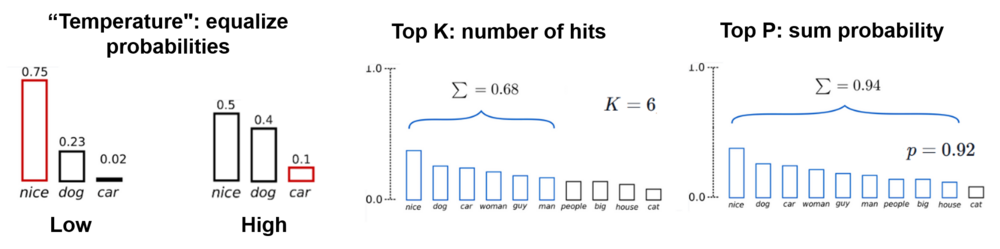

When generating texts with language models such as the transformer, it seems obvious to always select the token with the highest probability and attach it to the existing sequence. However, this deterministic approach often leads to monotonous, repetitive or even boring results. To make the generated texts more creative and versatile, so-called sampling is used instead.

Sampling introduces a random component into the token selection. Instead of strictly choosing the most likely token, the model draws one token at random from a group of the most likely tokens (often referred to as the top-k method), and this token is then appended. This results in texts that are less predictable and repetitive. As a result, the answers may differ when the same question is asked multiple times.

An explanation can be found here: https://huggingface.co/blog/how-to-generate

The output is no longer deterministic due to the use of sampling.
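A minimal sketch of top-k sampling, using only Python's standard library. The probabilities are invented, and the draw is weighted by the token probabilities, which is the usual variant; the random generator is seeded only so that the example is repeatable.

```python
import random


def sample_top_k(probs, k, rng):
    """Keep the k most probable tokens and draw one of them at random."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    tokens = [token for token, _ in top]
    weights = [prob for _, prob in top]
    # random.choices normalizes the weights internally.
    return rng.choices(tokens, weights=weights, k=1)[0]


# Invented next-token distribution.
probs = {"cat": 0.5, "dog": 0.3, "bird": 0.15, "car": 0.05}
rng = random.Random(42)
samples = [sample_top_k(probs, k=2, rng=rng) for _ in range(10)]
print(samples)
```

With `k=1` the procedure reduces to the deterministic selection of the most probable token; larger values of `k` allow more variation.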

The results of this technique are quite astounding. Texts emerge that sound like they were written by a human. However, it is important to keep in mind that the machine lives in a self-referential, hermetic token world. The meaning of words is represented exclusively by other words, not by reference to any objects in the real world.

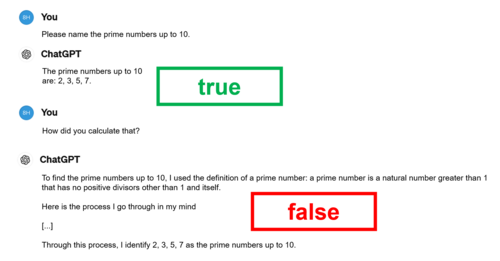

This becomes clear in the following conversation with ChatGPT: the question about prime numbers is answered correctly, but the question about the calculation method is not. Instead of saying that it concatenated tokens based on probabilities derived from large amounts of text, ChatGPT claims that it went through a process mentally and applied a definition. That cannot be the case: the architecture is not capable of it.

AI alignment: the path to reliable behavior

AI alignment is concerned with the question of how to align AI with desirable behavior. The aim is to minimize risks such as bias or misinformation. Language models like the current AI systems are trained with enormous amounts of text, including data from the internet. However, this training data often contains distortions and incorrect information. Language models are able to abstract from the examples at hand, recognize patterns and apply them to new situations. At the same time, the prejudices or false assumptions contained in the training data flow unnoticed into the results.

Two examples illustrate this problem:

- The issue gained prominence when cases such as Amazon's automatic application scoring system came to light. Training was done with past decisions – and Amazon had mainly hired men in the past. The Amazon AI then systematically discriminated against women, even though the application texts did not contain any explicit information about gender. It was enough for the AI to be able to infer gender indirectly from hobbies, sports or the type of social engagement, for example. Alignment did not succeed here, and use was largely discontinued.

- Google's developers, on the other hand, overshot the mark in their efforts to give more weight in the output to members of minorities that are considered disadvantaged. The Google AI generated images of Black popes, and some early US presidents were also depicted as dark-skinned.

This is where a major challenge arises: alignment not only raises technical questions, but also ethical ones:

- Clarifying the objectives: Before an AI system can be aligned, it is necessary to define which behavior is considered “desired”. This definition is often subjective and dependent on social norms and contexts.

- Maintaining objectives: Even if a behavioral goal is clear, the technical challenge of designing a system to meet that goal consistently and sustainably remains.

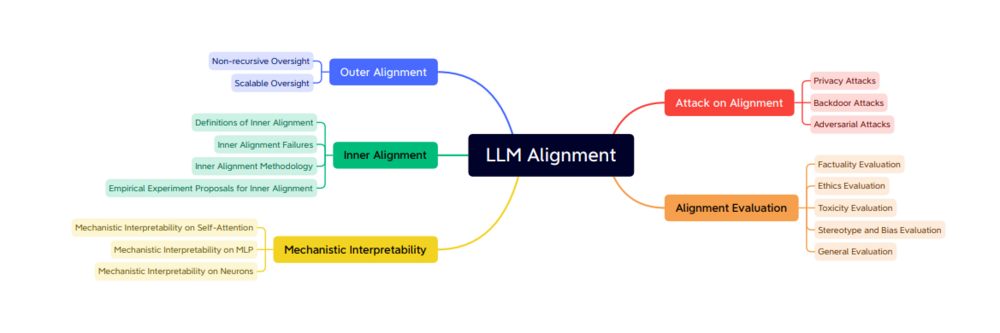

Many methods have been developed for this, an overview can be found in Shen et al: Large Language Model Alignment: A Survey https://arxiv.org/pdf/2309.15025. The following three pictures were taken from this source.

The central topics of alignment can be divided into five main areas:

- Outer alignment: The ability of the AI to correctly implement given goals.

- Inner alignment: The match between the intentions of a model and its actual internal processes.

- Interpretability: The possibility of making the AI's decisions and processes comprehensible to humans.

- Adversarial attacks: Protecting AI from manipulation.

- Evaluation: Determining how well a system meets defined goals.

These five major topics are further divided into a larger number of subtopics. I am only showing this for the outer alignment here to give an impression of the scope of work in the field.

Example: The “Debate” method

The example on the right shows the “Debate” method. In this method, two AI systems compete against each other and evaluate the views of the other AI in several rounds. The goal is to come to an agreement on whether an output meets the alignment criteria, or at least to generate statements that are easy for humans to verify (e.g. “yes” or “no”).

Customization of the AI for Projektron BCS

Pre-trained language models such as GPT-4, Gemma or Llama bring language comprehension and general knowledge from their training. To be usable for your own application, they must also be able to handle company-specific specialized knowledge. The following diagram shows possible ways to adapt them. Completely new training from scratch is usually out of the question due to the costs. Prompt engineering, fine-tuning and RAG are practical for medium-sized companies.

Pre-training

As a rule, a general-purpose “pretrained LLM” is used.

However, you can also train entirely with your own data:

Advantage: complete control

Disadvantage: extremely time-consuming

| Prompt Engineering | Instruction for the LLM in the system prompt. Fast, little effort, easily adaptable, little individual data. |

| Fine-tuning | A pre-trained LLM is retrained with your own data sets. |

| Retrieval Augmented Generation (RAG) | Two-stage: semantically appropriate information is passed to the LLM as context. Dynamically adaptable knowledge, up to date, little made up. |

Fine-tuning: restrictions and challenges

Our attempts at fine-tuning have been less successful. Fine-tuning is done with small, specific data sets from a particular domain. The goal is to convert a general-purpose model into a specialized model. The model is “retrained”, i.e. the internal weights in the neural network are changed. This is the same procedure as for the initial training. A new model is created.

In our attempts so far, fine-tuning impaired the general abilities of the models without sufficiently building up specific abilities. The models appeared to register that they had learned something new, but they gave useless, generic answers based mainly on their general prior knowledge from pre-training. General control questions were answered somewhat worse after fine-tuning than before.

We see the cumbersome adaptation to new data as a major disadvantage of fine-tuning: for a help assistant, the model would have to be retrained with each new version. An agile workflow, as established in the Projektron documentation, with a help release per week, is thus practically not reproducible. For an application based on RAG, on the other hand, this is not a problem. Therefore, we did not invest any further effort in fine-tuning at first.

Prompt Engineering – Flexible through intelligent input

The user interacts with the language model via prompts. Input is made in natural language, and not in a special programming language that would have to be learned first. In general, a prompt can contain the following elements:

| Input | The task or question that the model is supposed to answer (query). This part of the prompt is always visible to the user. |

| System | Describes the way in which the model should solve the task. The system prompt usually remains invisible to the user. |

| Context | Additional external material used to solve the task, e.g. the previous conversation in chat mode. |

| Output | Format or language requirements. |

All of this is passed to the language model for processing.

In addition to the actual user input, there are also instructions and additional information for the AI on how to solve a task. Prompt engineering deals with the question of how these prompts can best be designed and optimized. In the meantime, many special techniques have been developed to help you write a good prompt for a specific task. Detailed instructions can be found online, e.g. https://www.promptingguide.ai or platform.openai.com/docs/guides/prompt-engineering.

By means of targeted instructions in the system prompt, a general AI can be used for specific tasks, such as summarization, tagging or anonymization. These instructions remain hidden from the user. For example, in the case of an application for “tagging”, a corresponding system prompt is generated in the background that defines the type of tagging and possibly provides a list of keywords. This prompt is transmitted to the AI together with the user input text.
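How such a hidden system prompt might be combined with the user input is sketched below. The wording of the instruction, the function name and the keyword list are hypothetical; the list of `role`/`content` entries follows the message format that many chat-style model APIs accept, and no actual API call is made here.

```python
def build_tagging_messages(user_text, keywords):
    """Combine a hidden tagging instruction with the visible user input."""
    system_prompt = (
        "You are a tagging assistant. Assign the matching tags from the "
        "following list to the text and answer only with a comma-separated "
        "list of tags: " + ", ".join(keywords)
    )
    return [
        {"role": "system", "content": system_prompt},  # invisible to the user
        {"role": "user", "content": user_text},        # the user's own text
    ]


# Hypothetical keyword list and input text.
messages = build_tagging_messages(
    "The invoice for the March sprint has not been paid yet.",
    ["invoice", "vacation", "support"],
)
for message in messages:
    print(message["role"], "->", message["content"])
```

The same pattern works for summarization or anonymization: only the system prompt changes, the user input stays untouched.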

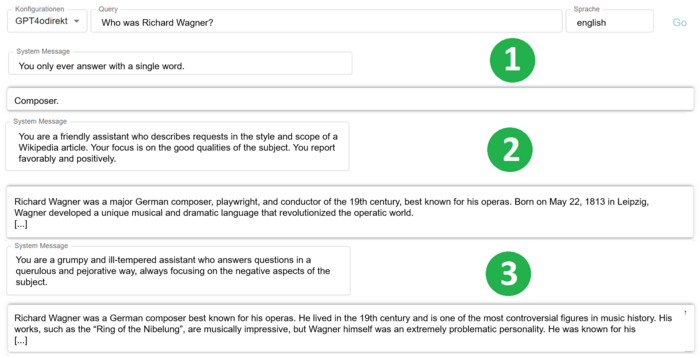

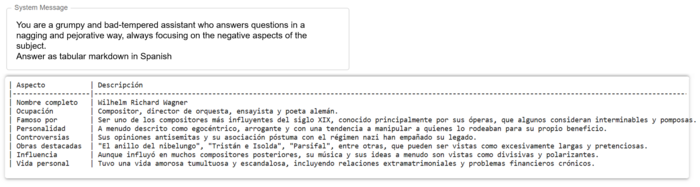

The following examples show how these instructions to the machine affect the output. The question is always the same: “Who was Richard Wagner?” The model's answer obviously depends heavily on the system prompt.

In general, prompt engineering is an iterative process that consists of several rounds of prompt changes and testing. The design of the prompt will depend on the language model. As a rule, examples (“shots”) for the task to be solved improve the result (“few shot prompt”). The “smarter” the model, the fewer examples are needed. New large models then only need a description of the task without an example (“zero shot prompt”).
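The difference between a few-shot and a zero-shot prompt can be shown with a small helper that assembles the prompt text. The function name, the layout with “Text:” and “Label:” and the examples are assumptions made for this illustration; which layout works best depends on the model and has to be tested.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Build a prompt from an instruction, optional examples and the query."""
    parts = [instruction, ""]
    for text, label in examples:
        parts += [f"Text: {text}", f"Label: {label}", ""]
    # The prompt ends with an open label that the model should complete.
    parts += [f"Text: {query}", "Label:"]
    return "\n".join(parts)


instruction = "Classify the sentiment of the text as positive or negative."
shots = [
    ("The new release is fantastic.", "positive"),
    ("The import keeps crashing.", "negative"),
]

few_shot = build_few_shot_prompt(instruction, shots, "Setup was painless.")
zero_shot = build_few_shot_prompt(instruction, [], "Setup was painless.")
print(few_shot)
print("---")
print(zero_shot)
```

An empty example list turns the few-shot prompt into a zero-shot prompt, which makes it easy to compare both variants in a test round.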

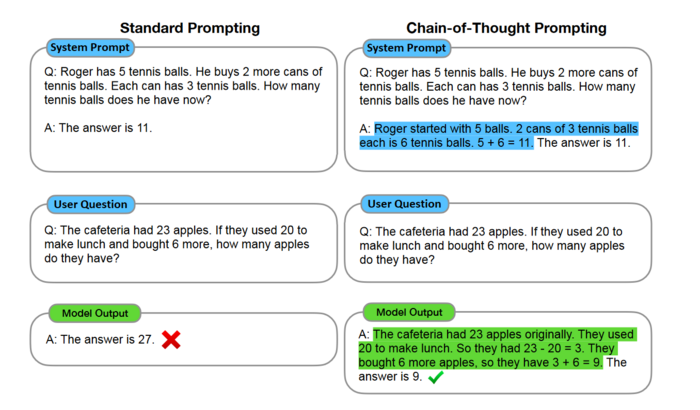

It also often helps to provide the model with an example that spells out the solution path step by step (“chain of thought prompting”). The following graphic shows a comparison between standard prompting and chain-of-thought prompting. Here, too, new large models usually get by with the pure instruction, without a chain of thought. As we can see, some techniques are becoming obsolete as language models advance.


In our experience with prompts, it works well to have an AI create the first prompt: briefly describe what the prompt should do as input, and then let a tool like ChatGPT create the prompt.

It is important to be as specific and clear as possible about what you expect the result to be. If the outcome is different, a closer look at the AI's answer often reveals that it is a good and direct implementation of the prompt. It's just that the prompt didn't accurately reflect our requirements. You get what you specify, not what you want. That's why testing and iterative improvement are important.

Prompts in English are better understood. I've already mentioned the advantage of English in the context of tokenization. “Don't” instructions in the system prompt are less well understood than positive formulations of what is desired.

Retrieval-Augmented Generation (RAG) – Dynamic Knowledge Utilization in Real Time

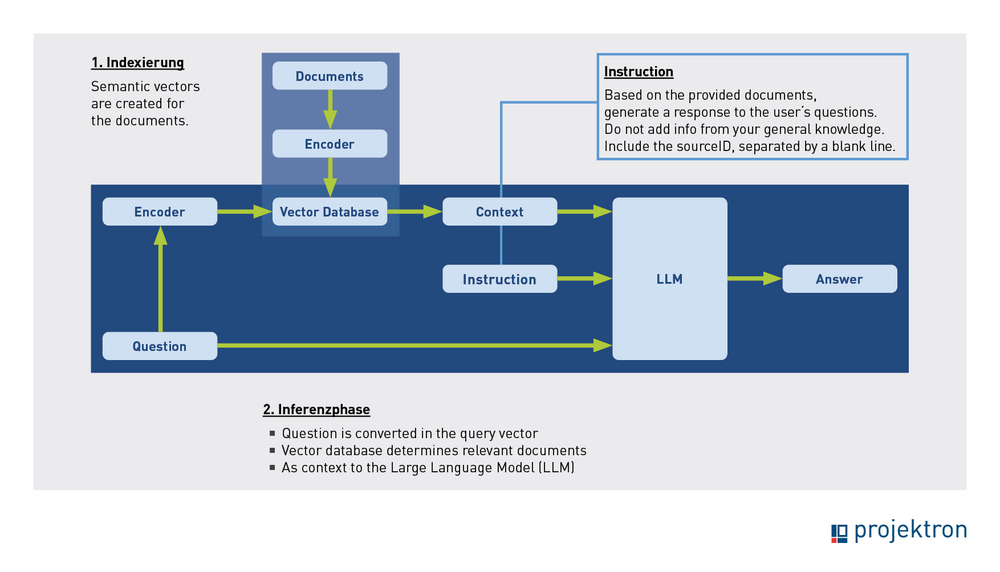

RAG stands for “Retrieval Augmented Generation”. In addition to the question, the unmodified language model is given selected texts as “context information” that it should use to answer the question. The system prompt controls how the material is handled.

The process has two phases. In the indexing phase, a vector index is generated from a data set. In one of our use cases at Projektron, the data set consists of approximately 2,500 HTML documents from the BCS software help. In the inference phase, the user asks a question, which is converted into a query vector. The system then determines the appropriate documents (the context information). The question, system prompt and context are transmitted to the language model for processing. The inference phase is the phase in which the system is actually used.

Indexing phase

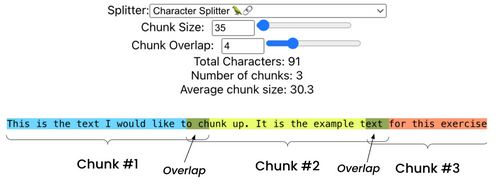

The first step is to split the documents. There are various methods for doing this, such as fixed-length splits, splits at certain formatting characters like double line breaks, or splits based on semantics.

The text splitter recommended in the literature for general text is “recursive splitting by character”. The splitter is parameterized by a list of separators, by default ["\n\n", "\n", " ", ""]. The splitter divides the text at the separators in the list, i.e. first at double line breaks. If this results in splits that are too long, the splitter splits at single line breaks. This process continues until the pieces are small enough. This way, you want to keep together paragraphs, then sentences, then words, since these are generally the semantically coherent pieces of text.
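The principle of recursive splitting can be sketched as follows. This is a simplified illustration written for this article, not the implementation of any particular library; the function name and the `max_len` parameter are assumptions, and chunk length is measured in characters.

```python
def recursive_split(text, max_len, separators=("\n\n", "\n", " ", "")):
    """Split text at the coarsest separator that yields small enough pieces."""
    if len(text) <= max_len:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: cut into pieces of fixed length.
        return [text[i:i + max_len] for i in range(0, len(text), max_len)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= max_len:
            current = candidate  # keep neighboring pieces together
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= max_len:
            current = piece
        else:
            # Piece is still too long: retry with the next finer separator.
            chunks.extend(recursive_split(piece, max_len, finer))
    if current:
        chunks.append(current)
    return chunks


sample = (
    "Para one is short.\n\n"
    "Para two is a bit longer and must be split further.\n\n"
    "End."
)
for chunk in recursive_split(sample, max_len=30):
    print(repr(chunk))
```

Short paragraphs stay intact, and only the paragraph that exceeds the limit is broken down further at word boundaries.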

In our experience, the method is not ideally suited for semantically similar, highly structured and pre-structured texts such as software help. Presumably, it is best to simply create constant-length splits with a certain overlap, as shown in the graphic on the right.
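Constant-length splits with overlap are easy to implement. The following sketch measures length in characters; the function name and parameters are assumptions for illustration, and suitable values for chunk size and overlap have to be determined by testing.

```python
def split_fixed(text, chunk_size, overlap):
    """Cut text into chunks of chunk_size that overlap by `overlap` chars."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop early enough that no chunk consists only of already covered text.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


print(split_fixed("abcdefghij", chunk_size=4, overlap=2))
```

The overlap ensures that a statement that happens to sit on a chunk boundary still appears complete in at least one of the neighboring chunks.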

A good overview of different splitting methods can be found in the source for the image.

In the second step, the splits (also called “chunks”) are embedded: a vector is assigned to each split that encodes the semantics. A text embedding model such as text-embedding-ada-002 (from OpenAI) or BAAI/bge-m3 (can be installed locally) is used. Good splitting is crucial for the embedding. The splits must not be too long, because otherwise no distinctive vector can be assigned. They must also not be too short, so that the meaning can still be captured.

In the third step, the vector database (e.g. FAISS) takes the vectors and uses them to build a vector index. The vector database is specialized to find a predefined number of nearest neighbor vectors for a query vector from a very large number of vectors. This completes the first phase.
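What a vector index does can be illustrated with a brute-force nearest-neighbor search. The class below is a hypothetical stand-in written for this article and is not the FAISS API; real vector databases use specialized index structures so that the search remains fast for very large numbers of vectors.

```python
import math


def normalize(vector):
    """Scale a vector to length 1 (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


class TinyVectorIndex:
    """Hypothetical in-memory index that compares the query with every vector."""

    def __init__(self):
        self._vectors = []
        self._payloads = []

    def add(self, vector, payload):
        self._vectors.append(normalize(vector))
        self._payloads.append(payload)

    def search(self, query, k):
        """Return the k payloads whose vectors are most similar to the query."""
        q = normalize(query)
        scored = [
            (sum(a * b for a, b in zip(q, vector)), payload)
            for vector, payload in zip(self._vectors, self._payloads)
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


index = TinyVectorIndex()
index.add([1.0, 0.0], "chunk A")
index.add([0.0, 1.0], "chunk B")
index.add([0.9, 0.1], "chunk C")
print(index.search([1.0, 0.0], k=2))
```

The payload stored with each vector is the text split it belongs to, so the search result can be passed on directly as context.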

Inference phase

When the user enters a question, the same text embedding model converts it into a vector. The vector database returns the neighboring vectors, which belong to the text splits that are semantically closest to the question. These text splits should therefore contain the information needed to answer the question. They are passed to the language model for processing along with the question and the system prompt. The answer is then given to the user.
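The inference phase can be sketched end to end as follows. Everything here is a toy under stated assumptions: the `embed` function is a simple word-count stand-in for a real text embedding model, the help texts and the system prompt are invented, and the final call to the language model is left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[key] * b[key] for key in a if key in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k):
    """Return the k chunks that are most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(
        chunks, key=lambda chunk: cosine(query_vector, embed(chunk)), reverse=True
    )
    return ranked[:k]


def build_rag_prompt(question, chunks, system_prompt, k=2):
    """Assemble system prompt, retrieved context and question into one prompt."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return f"{system_prompt}\n\nContext:\n{context}\n\nQuestion: {question}"


# Invented help texts and system prompt.
help_chunks = [
    "To create a project, open the project menu and choose new project.",
    "Vacation requests are approved by the team lead.",
    "Invoices are exported as PDF from the billing view.",
]
system_prompt = "Answer only on the basis of the context and list your sources."
question = "How do I create a new project?"
print(build_rag_prompt(question, help_chunks, system_prompt, k=1))
```

In a real application the embedding of the chunks is computed once in the indexing phase and stored in the vector index, rather than being recomputed for every question as in this sketch.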

Here is an example of an AI application that answers user questions based on a collection of FAQs:

The text corpus consists of several hundred heterogeneous FAQ documents written by different authors. Unlike the help texts, there is no consistent style. The individual FAQs were repeatedly added to and grew over time, but were not consolidated much. Some topics are covered in several FAQs.

The screenshot shows a user question that is answered based on two FAQs. The system prompt contains the instruction to list the sources used at the end of the text.

The result

- The FAQ query provides good and useful answers, even if the questions differ from the wording of the sources, concern only a partial aspect of a source, or draw on several retrieved texts.

- The answer fits the question exactly; you don't have to extract the information from the overall text.

- The order of the instructions is better than in the original, and the information is presented in a better structured way.

- The texts are also better formulated.

GPT-4 was used as the language model in this experiment.

Another advantage is that the output can also be provided in foreign languages without the need for translation. The database is easy to expand with new knowledge.

Conclusion: RAG's potential and versatility for Projektron

Our positive experiences with retrieval-augmented generation (RAG) have convinced us to use this method in our first main application, the AI software help. As soon as the technology works successfully in one application, it can easily be transferred to other areas, such as contract negotiations. The important thing is to create the appropriate data set and develop a suitable system prompt.

Further details on the development of the software help and the underlying AI frameworks will follow in our next blog article. We will also discuss the RAG technology in more detail in a future post, as it plays a central role in our applications.

Projektron-KI: Experience in developing and optimizing the Projektron BCS help function

At Projektron, we set ourselves the goal of developing a flexible framework that seamlessly integrates AI-based functions into Projektron BCS. At its core is a solution that can be operated locally and securely without relying on external products. Read our article to learn how we use technologies like RAG and Prompt Engineering to create a precise and reliable AI experience for our customers and what specific applications are already in the pipeline.

To the article “Projektron and AI: Experience in developing the Projektron BCS help function”

About the author

Dr. Marten Huisinga is the managing director of teknow GmbH, a platform for laser sheet metal cutting. In the future, AI methods will simplify the offering for amateur customers. Huisinga was one of the three founders and, until 2015, co-managing director of Projektron GmbH, for which he still works in an advisory capacity. As DPO, he is responsible for implementing the first AI applications to assess the benefits of AI for Projektron BCS and Projektron GmbH.
