12/16/2024 - Articles

Projektron and AI: experiences in developing and optimizing the Projektron BCS help function

In a previous blog post, we described general properties of language models and techniques for creating an application from them. This was followed by descriptions of some of our experiments with RAG, prompt engineering and fine-tuning. The preliminary tests with prompt engineering and RAG looked promising. After the end of our “research phase”, we considered what a productive application might look like and what tasks the AI could solve in the context of Projektron BCS.

General requirements for our AI solution

At Projektron, especially for our Projektron BCS software, we didn't want to create point solutions, but rather develop a flexible framework that works as generically as possible. This AI assistant should be built from individual applications that determine which task should be solved with which resources and how. It should be possible to link the applications (AI workflows).

As a result of the preliminary research and tests completed in the summer of 2024, we are focusing on RAG and prompt engineering as techniques. We limit ourselves to using the language comprehension of the models and avoid relying on their training knowledge. This should prevent hallucinations.

The framework should be designed in such a way that the individual components such as the language model, embedding model or vector database can be easily exchanged. We want to remain independent of certain products due to the rapid and unforeseeable development. It is important that the framework can be operated completely locally in order to meet all requirements for data security and data protection.

In our view, a high quality of answers is crucial for success. The answers should be precise and accurate. If a question cannot be answered based on the context material, the AI should report this and not try to make something up (hallucinations). The traceability of the answers is an important quality criterion. Wherever possible, the source of the information should be linked in the answer so that it can be checked with a single click.

Since the development of AI applications relies heavily on trial and testing, it is important to keep the learning loops simple. One focus is on comprehensive and easy-to-read logging of the AI actions. This is essential for investigating unexpected results and finding the point that needs to be improved. User feedback is recorded and evaluated.

In 2025, the framework is to be delivered to customers as part of Projektron BCS. SaaS customers are already receiving answers to help questions free of charge after updating to version 24.4. Customers with local BCS installations will receive instructions on how to use this first productive service by February 2025 at the latest.

It is planned that Projektron will deliver a basic set of ready-made applications in the course of 2025. In a second step, the customer can also create their own “applications”. The technology should therefore also be suitable for heterogeneous source data.

Exemplary applications

| No. | Application | Status |
|---|---|---|
| 1. | Software help / FAQ: Answer to the exact user question | productive |
| 2. | Summarize ticket: The problem description, which may be spread across many comments from different people, should be summarized. | productive |
| 3. | Suggested solutions for new tickets: Search for similar tickets, generate suggested responses, improve suggested responses in chat mode. | in testing |
| 4. | Summaries of other lists: The entries in the sales history for customers or individuals can be summarized, as can other lists, such as the project history log. | 2nd quarter 2026 |
| 5. | Queries about AI agents, tools & MCP: In the future, user queries will be sent to a central AI agent that uses “tools” to answer them. The AI software help is one such tool, and the various BCS functions are also tools. These are called up via the MCP interface. If the user asks how to create a vacation date, the AI agent responds based on the documentation. If the user asks how many vacation days they have left this year, the AI agent queries the data directly from BCS via MCP. | in development, around the end of 2026 |
| 6. | Language versions: Based on a monolingual data set, many output languages are possible. | productive |
| 7. | Local AI framework: In order to be able to operate the AI functions completely locally with high security requirements, the customer must install the BCS AI framework on their premises. Additional components such as the vector database, the embedding model, and the local language models (Gemma, Llama, qwen, etc.) are also required. We are working on packaging that makes this system environment manageable, including documentation and training. | in development, around mid-2026 |
| 8. | Actions via AI agents, tools & MCP: | in development, around mid-2026 |

Architecture

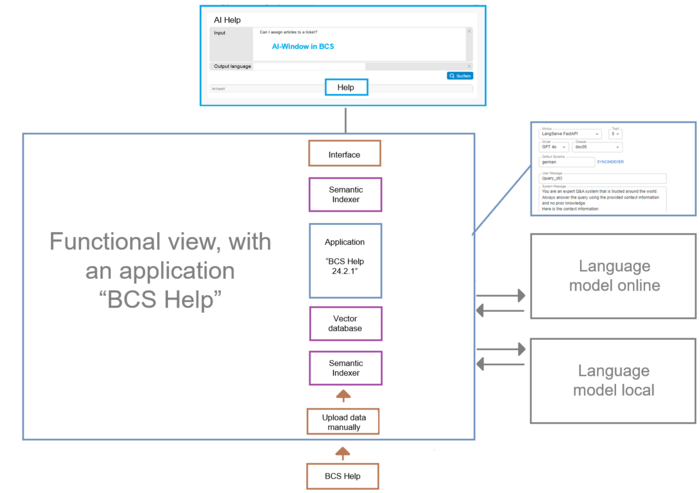

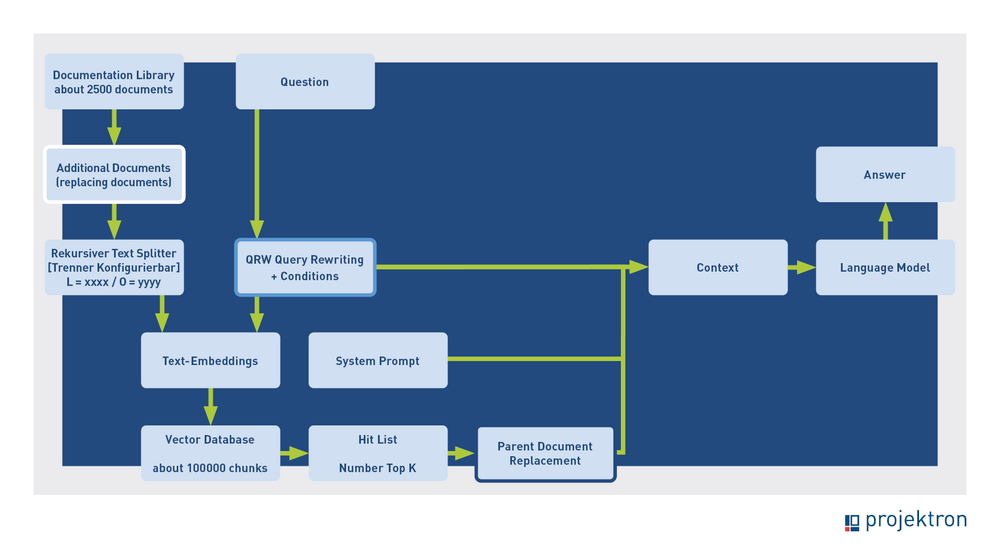

The following graphic shows the functional view of the framework. The software help is entered as the first application. Interfaces exist to data sources, to language models, to the display of results in Projektron BCS and, via a user interface (UI), to the framework administrator. The administrator defines the applications via the UI. The system prompt is the core element; it determines what the application should do. In addition, the administrator selects the language model (internal/external) and can enter parameters. For a RAG application such as the help, the administrator can also control how the vector index is generated from the data set (for example, the type and size of the text splits), how many hits are returned and what minimum rating these must reach.

The framework is addressed via an interface from Projektron BCS. When a user enters a question in the AI help window, a request is sent to the framework containing the relevant application as a parameter. Depending on the task to be completed (help, summarize ticket), various applications can be requested via the interface.
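As a rough illustration of the two paragraphs above, an application definition and the dispatch of an incoming request could be sketched as follows. All names here (`Application`, `handle_request`, `filter_hits`, the model names and the default values) are assumptions made for this example and are not taken from the Projektron framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """Hypothetical application definition as an administrator might enter it."""
    name: str
    system_prompt: str        # core element: what the application should do
    language_model: str       # placeholder name of an internal or external model
    chunk_size: int = 1000    # size of the text splits, in characters
    top_k: int = 4            # how many hits are returned
    min_score: float = 0.0    # minimum rating a hit must reach


APPLICATIONS = {
    app.name: app
    for app in (
        Application("help", "Answer the question using the texts found.",
                    "external-model", top_k=2, min_score=0.5),
        Application("summarize_ticket", "Summarize the ticket.", "local-model"),
    )
}


def handle_request(request: dict) -> Application:
    """Look up the application named in the request parameter."""
    name = request["application"]
    if name not in APPLICATIONS:
        raise KeyError(f"unknown application: {name}")
    return APPLICATIONS[name]


def filter_hits(app: Application, scored_hits: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Keep the best-rated hits that reach the application's minimum rating."""
    kept = [(text, score) for text, score in scored_hits if score >= app.min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[: app.top_k]
```

A request such as `{"application": "help", "question": "..."}` would then select the help definition, and its `top_k` and `min_score` values would limit which hits reach the language model.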

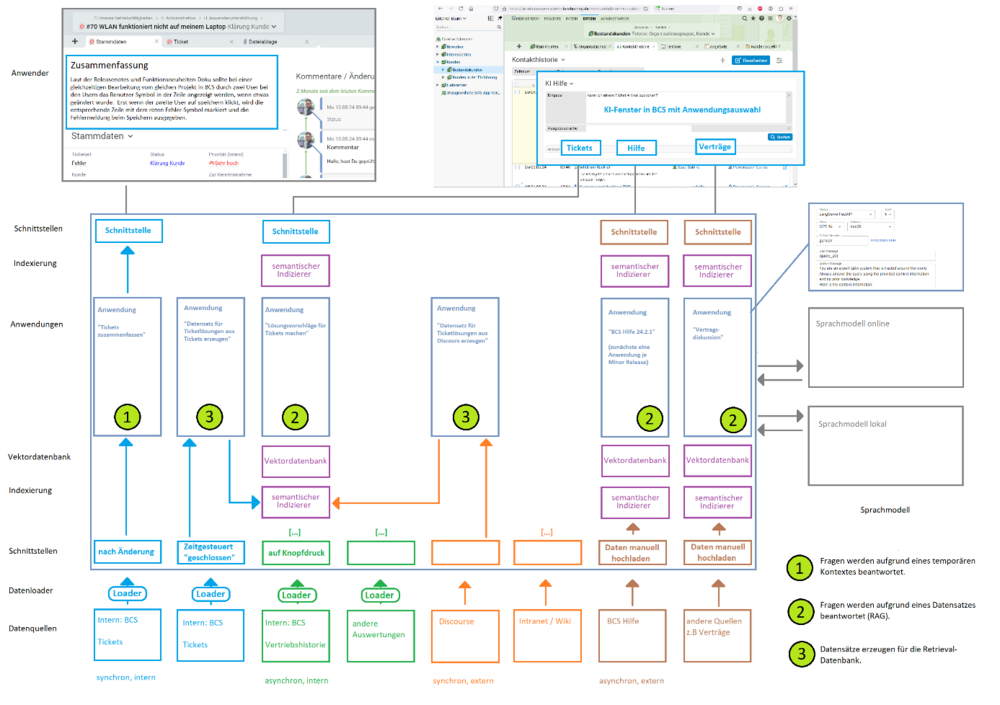

The framework can contain a large number of applications, as shown in the following graphic. Applications can be linked. For example, a data record consisting of anonymized summaries can be generated from the closed tickets with a local model. This data record is then available for answering newly incoming tickets.

In a later version, the customer can define their own individual applications that do not have to have anything to do with BCS. In the picture, this is shown on the far right as an example, “Contracts”. This provides support for employees who often have to negotiate contracts or explain them, as is the case in a software company where a license agreement is concluded with each new customer. Most of these questions have already been answered at some point in the past. An AI application can draw on this experience to speed up the processing of new cases. The data set consists of documents, each containing a contract clause, the customer question previously asked about it, and the decision made. This can be done simply using a collection of .txt files. These are processed like the help documents: a vector index is created that can then be queried.

In the same way, a customer can use AI to make company-specific process instructions, security guidelines and similar data collections searchable.

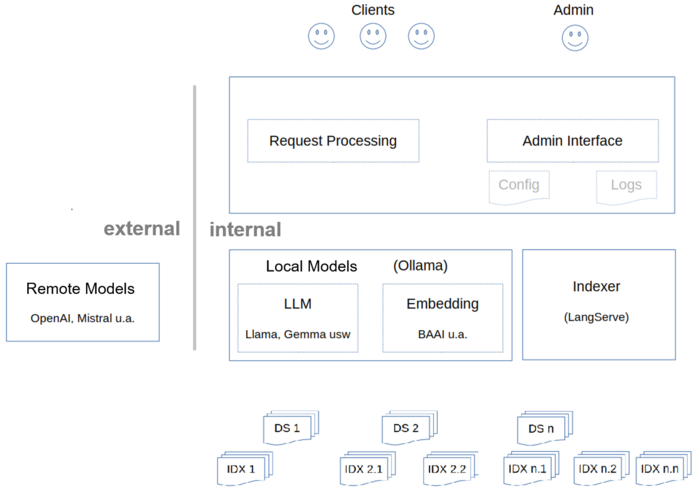

The following graphic shows the technical components. In principle, these are all interchangeable so that we can react to rapid developments in the field that are difficult to predict. The server-like components such as Ollama and LangServe are more deeply anchored; they have a standard-like status and are also widely used in industry (as of December 2024). We selected components such as text embedding through testing; more on this later. The language models can be replaced with a simple configuration entry.
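One common way to keep components interchangeable through configuration is a small interface plus a registry. The following is only a sketch of that general pattern; the `LanguageModel` protocol, the two stand-in models and the `language_model` configuration key are hypothetical and do not describe the actual framework.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Minimal interface that every exchangeable model has to offer."""
    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in model: returns the prompt in upper case."""
    def generate(self, prompt: str) -> str:
        return prompt.upper()


class ReverseModel:
    """Second stand-in model: returns the prompt reversed."""
    def generate(self, prompt: str) -> str:
        return prompt[::-1]


# Hypothetical registry: the configuration names a model, the calling code never does.
MODEL_REGISTRY: dict[str, type] = {"echo": EchoModel, "reverse": ReverseModel}


def load_model(config: dict[str, str]) -> LanguageModel:
    """Instantiate whichever model the configuration entry names."""
    return MODEL_REGISTRY[config["language_model"]]()
```

Switching from one model to the other then only means changing the value of `language_model` in the configuration; the code that calls `generate` stays the same.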

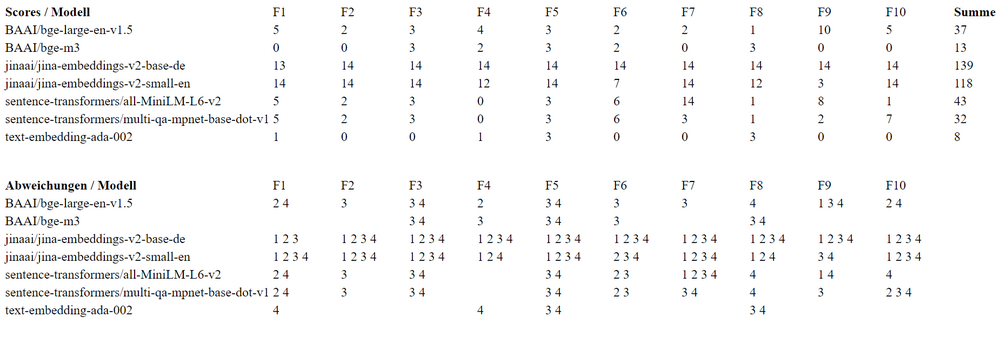

To select a local embedding model, we ran a comparative test with various products. We used OpenAI's text-embedding-ada as a benchmark, but this cannot be operated locally. The test was based on a data set of approx. 100 texts and 10 questions, each of which was asked specifically about one text, so that it was clear which text should be the top hit for the question. The texts were fed into the RAG solution from OpenAI, the questions were asked, and the 4 hits (i.e. TopK=4) were recorded. OpenAI was able to answer the questions well, with the expected documents coming in first. We compared this benchmark with 6 local embedding models and with text-embedding-ada-002, which is also available individually but only online. The 10 questions were asked, and the 4 hits in each case were compared with the benchmark. A deviation at position 1 scored 7 points, and deviations at the following positions scored 4, 2 and 1 point. We selected the local model with the lowest number of points. That was BAAI/bge-m3 from the Beijing Academy of Artificial Intelligence.
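The scoring scheme can be written down in a few lines. The sketch below assumes that a “deviation” means the candidate model returns a different text than the benchmark at the same position; the function names and the data layout are invented for this example.

```python
# Penalty points for a deviation at positions 1 to 4 (values from the text).
POSITION_PENALTIES = (7, 4, 2, 1)


def deviation_points(benchmark_hits: list[str], candidate_hits: list[str]) -> int:
    """Sum the penalties for every position where the candidate differs."""
    return sum(
        penalty
        for penalty, expected, actual in zip(POSITION_PENALTIES, benchmark_hits, candidate_hits)
        if expected != actual
    )


def rank_models(
    benchmark: dict[str, list[str]],
    candidates: dict[str, dict[str, list[str]]],
) -> list[tuple[str, int]]:
    """Total the points over all questions; the lowest total comes first."""
    totals = {
        model: sum(deviation_points(benchmark[q], hits[q]) for q in benchmark)
        for model, hits in candidates.items()
    }
    return sorted(totals.items(), key=lambda item: item[1])
```

With this weighting, a wrong top hit costs as much as getting positions 2, 3 and 4 wrong together, so the ranking mainly rewards models that agree with the benchmark on the first hit.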

The following table shows the results in detail: the upper part shows the points for each question, and the lower part shows which texts were selected in each case. We plan to repeat the test at intervals; if there are significant improvements, we could change the embedding model. However, we would then have to re-index the entire document pool, which involves a certain amount of work.

This describes the technical considerations and aspects of the framework. Through many small tests and feedback loops, we have ensured that the basic setup works according to our ideas.

First productive application: AI assistance

The following section describes the settings, additions and configurations that we made when implementing our first productive application, the Projektron BCS AI Help Assistant. We improved the application in several test and implementation loops. The process flow with which we went into the next test loop is shown in each case.

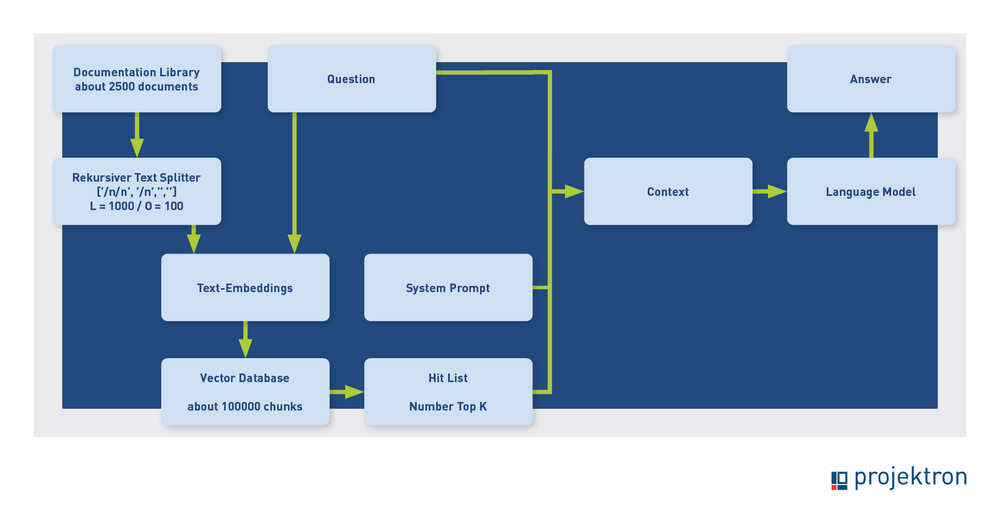

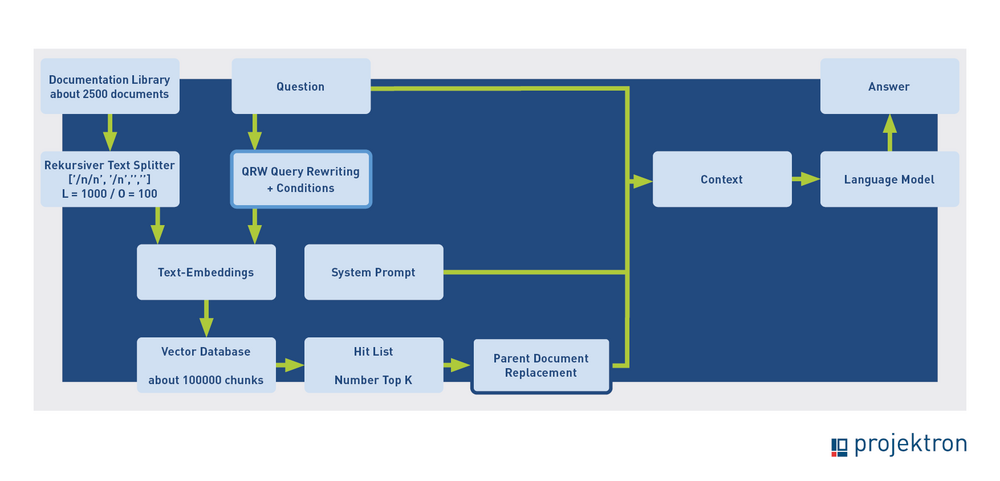

The indexing phase, the path from the documents to the vector database, is shown on the left in the images. The inference phase, the path from the user question to the answer, is shown on the right. As a reminder: the question is assigned a semantic vector by the text embedding, then the database is searched for similar vectors, whose associated texts thus deal with the same topic as the question. The hits are passed as context to the language model, together with the question and the instruction: “Answer the question using the texts found”.
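The two phases can be made concrete with a deliberately tiny example. Note the assumptions: the `embed` function below is a bag-of-words stand-in and not a real embedding model such as bge-m3, the “vector database” is a plain list, and the sample texts are invented. Only the overall flow (embed, search by similarity, build the prompt) mirrors the description above.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, index: list[tuple[str, Counter]], top_k: int = 4) -> list[str]:
    """Inference phase, step 1: return the texts most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda entry: cosine(q, entry[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


def build_prompt(question: str, hits: list[str]) -> str:
    """Inference phase, step 2: pass hits, question and instruction to the model."""
    context = "\n\n".join(hits)
    return f"Answer the question using the texts found.\n\nTexts:\n{context}\n\nQuestion: {question}"


# Indexing phase: every text is stored together with its vector.
texts = [
    "Create a ticket via the ticket list.",
    "Vacation requests are approved by the supervisor.",
    "Electronic invoices use business terms.",
]
index = [(t, embed(t)) for t in texts]
```

Calling `build_prompt(question, retrieve(question, index))` yields the text that would be sent to the language model.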

Our first service for the Projektron BCS Help was in line with the normal RAG scheme. The process flow is shown in the following image.

How do you find out if this setup delivers good results? To do that, you first need good test data. These should be validated question-answer pairs so that you can see whether the AI can reproduce the given answer to the question. This data was available in good quality at Projektron, since help requests are often submitted via the Projektron support server. Our test data therefore consists of resolved help tickets from support that have been accepted by the customer.

We expected that these questions would, on average, be one level too difficult for the AI, so that perhaps about a quarter could be answered well. The reason is that only trained project managers and administrators have support access. These people are familiar with the system and the help and should only have to pay for questions that they cannot solve themselves with the help of the documentation. This level of difficulty of the test data set seemed advantageous to us, as it leaves enough room for optimization. Another advantage of the data set is that the test is realistic because we can solve real customer problems (again).

The documentation department, as the experts for the help, tested the AI functions. The feedback was summarized, usually in Microsoft Word logs with screenshots and observations. Then the incorrect answers were examined, their causes were determined, and ways to improve the process were considered. The necessary changes were implemented and tested, followed by a new round of evaluation with the documentation department, which yielded the current result.

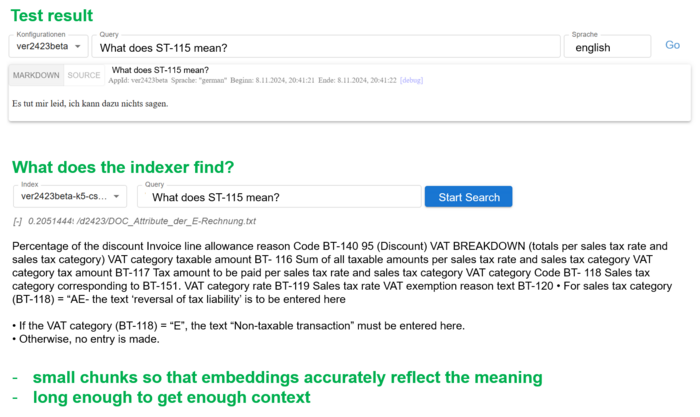

The following example of a test result from the first round led to a process change. The question “What does BT-115 mean?” could not be answered, although it is described in the documentation. The BT numbers mean “Business Terms”; they identify the fields in the electronic invoice. The question is difficult because it contains no context. If you expand the question slightly to “What does BT-115 mean in electronic invoices”, the correct answer comes up.

However, it is also possible to answer the out-of-context question correctly. An analysis of the hits from the vector database showed that a text split from the correct help document for electronic invoices was found, but not the one in which BT-115 occurred. With only this information, the language model cannot answer the question correctly.

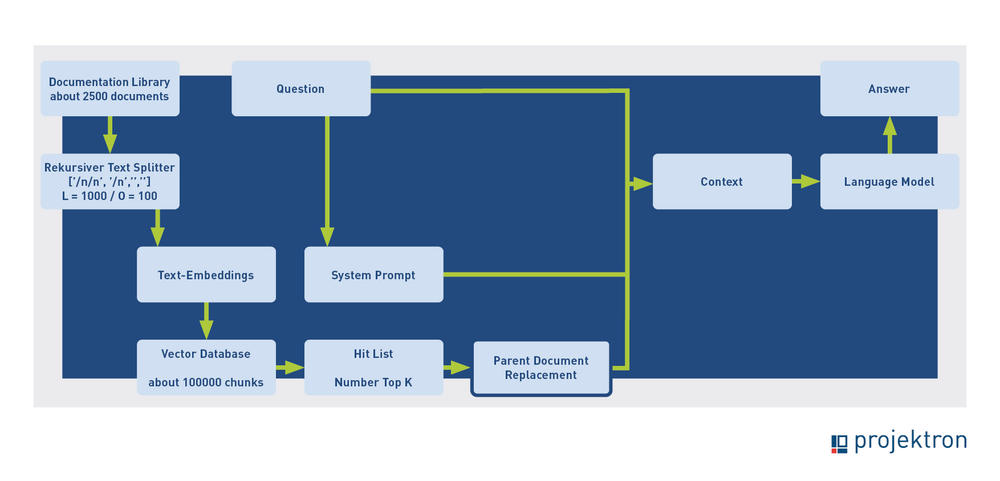

We then implemented a function that, when activated, replaces each split with the complete document, which is then passed to the language model (“parent document retrieval”). With this function, the out-of-context question for BT-115 is answered correctly.

There is a conflict of objectives when determining the optimal size of the text splits. On the one hand, the splits must be large enough to provide sufficient context for answering the question, but on the other hand, they must be small enough to contain only one topic, if possible, so that the vector can accurately reflect the meaning. With “Parent Document Retrieval”, this conflict of objectives can be partially resolved.
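The replacement step of parent document retrieval can be sketched as follows. The `Split` type, the `parent_id` field and the removal of duplicate parents are assumptions made for this example; the source does not say how the framework stores the link between a split and its document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Split:
    text: str        # the small piece that was vectorized and found
    parent_id: str   # identifier of the complete document it came from


def replace_with_parents(hits: list[Split], documents: dict[str, str]) -> list[str]:
    """Replace each found split with its complete document.

    Several splits may point to the same document, so each parent is
    returned only once, in the order of its best-ranked split.
    """
    seen: set[str] = set()
    parents: list[str] = []
    for hit in hits:
        if hit.parent_id not in seen:
            seen.add(hit.parent_id)
            parents.append(documents[hit.parent_id])
    return parents
```

The small splits are still what gets searched, so their vectors stay focused on one topic, while the language model receives the full page and therefore also the passage that the found split itself did not contain.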

The second version of the process flow, with parent document retrieval, was as follows.

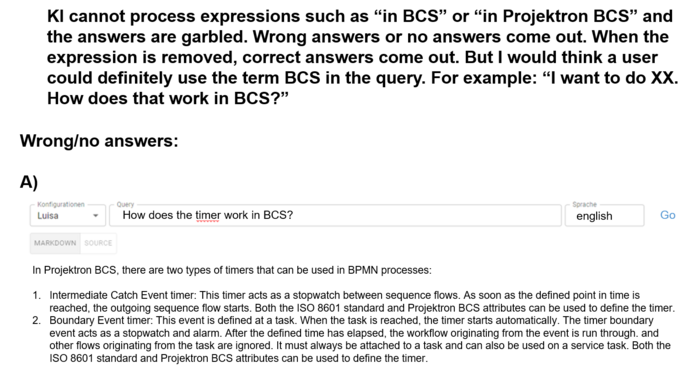

The analysis showed that the problem here also stems from the retrieval stage. If “BCS” or “Projektron” are included in the question, the vector search often returns very short pieces of text in which “BCS” or “Projektron” appear in particular. Often, none of the five hits has anything to do with the rest of the question. Even if you replace the split with the entire document, the language model does not come up with a correct answer with this contextual information.

If you were to search in a general text corpus, it would be a good strategy to give high weighting to very specific terms such as “BCS” or “Projektron” in order to find the presumably few documents that deal with these topics. In our particular application, however, every text in the corpus is about Projektron BCS, so paying a lot of attention to these terms is misleading. We therefore rewrite the question if it contains certain terms (here the filter is: “BCS” or “Projektron”). If the terms are not included, the question is used unchanged; otherwise, the terms are removed by an AI application. The question should otherwise be changed as little as possible. This is an initial example of the linking of AI applications. The process with Query Rewriting (QRW) now looks like this:

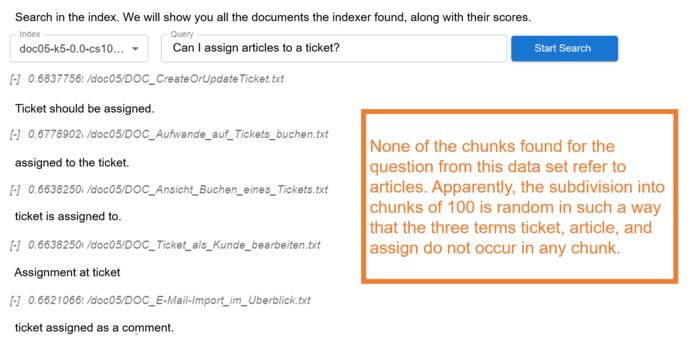
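The filter logic in front of the rewriting step can be sketched like this. In the framework, the rewriting itself is done by an AI application; the `strip_terms` function below is only a regular-expression stand-in so that the example runs on its own, and all names are hypothetical.

```python
import re
from typing import Callable

# Terms that appear everywhere in the corpus and therefore mislead the search.
FILTER_TERMS = ("BCS", "Projektron")
_TERM_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in FILTER_TERMS) + r")\b",
    re.IGNORECASE,
)


def strip_terms(question: str) -> str:
    """Stand-in rewriter: delete the terms and collapse the leftover spaces."""
    without_terms = _TERM_PATTERN.sub("", question)
    return re.sub(r"\s+", " ", without_terms).strip()


def rewrite_query(question: str, rewriter: Callable[[str], str] = strip_terms) -> str:
    """Rewrite only if a filter term occurs; otherwise keep the question unchanged."""
    if not _TERM_PATTERN.search(question):
        return question
    return rewriter(question)
```

A plain deletion like this can leave grammatical fragments behind, which is presumably one reason to hand the rewriting to a language model; any callable with the same signature can be passed as `rewriter`.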

This takes us to a new round of testing. The next finding again relates to the retrieval step. We tested whether shorter splits, which can be semantically vectorized more precisely, deliver better results in combination with parent document retrieval. Surprisingly, this was not necessarily the case, as the example shows. With larger split lengths (250 or 1,000 characters), the test question in the example was answered correctly, but not with a length of 100 characters. Apparently, there is no split that contains all three terms in the question: “ticket”, “article”, “assign”. The hits mostly revolve around assigning emails to tickets. This contextual material does not answer the question.

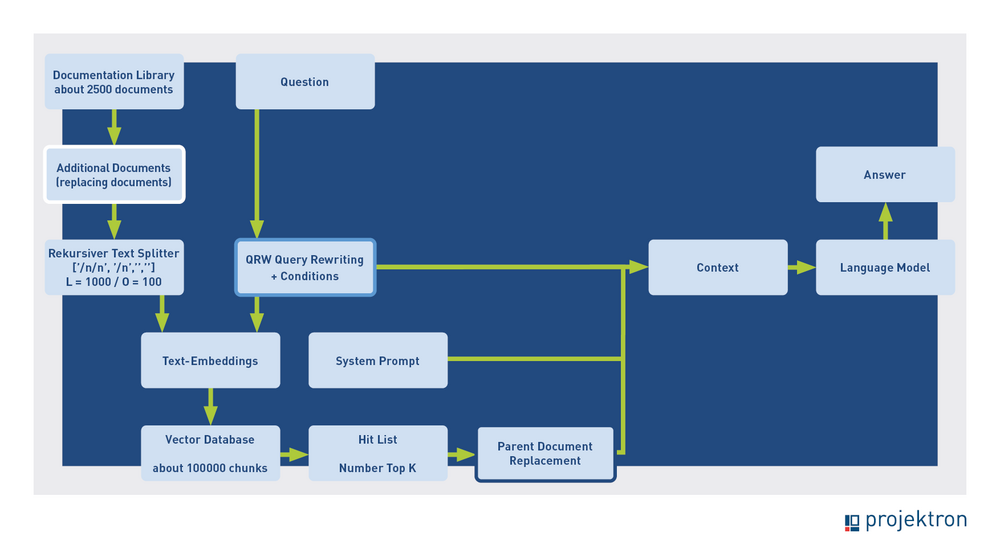

As a solution, we made it possible to supplement the data set with AI-generated additional documents. In this case, these are short summaries containing all keywords of the respective help page. The summaries are not split any further. If the indexer finds one of these summaries, it is replaced by the complete help page, just as with the text splits, and that page is then passed as context. The process then looks like this:

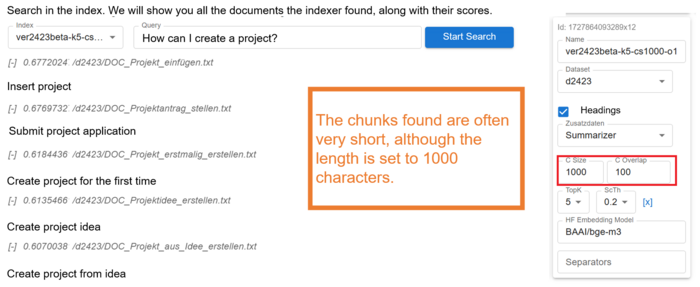

The last improvement step we would like to present also has to do with the splitting and indexing process. We have observed that the splits found by the indexer are often very short. In the standard setting of the “Recursive Text Splitter”, splits are made first at double line breaks, then at single line breaks, then at spaces, and finally within words. Since the help documents are highly structured, with many double line breaks, many splits are shorter than the “chunk size”.

The default setting works well for standard texts, but in our special case it provides less useful results. This is because the semantic search prefers these short splits. The result is often a search image like the one shown below. Although the split length is set to 1,000, the splits found are only between 16 and 26 characters long.

We suspect that, similar to the terms BCS and Projektron, the search therefore often selects splits that contain a highly rated term. These are not necessarily the splits that lead to the optimal original document after parent document replacement. We have therefore made the split parameters configurable via the framework so that a fixed length can easily be set, regardless of structures caused by line breaks or semantics. A large overlap ensures that the context is preserved as much as possible. According to the tests, this method seems to provide slightly better results than standard splitting in our special case. The overall process now looks like this:
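As an illustration of the fixed-length splitting with overlap described in this section, a minimal splitter could look like the sketch below. The function name and the default overlap of 200 characters are assumptions; only the split length of 1,000 appears in the text.

```python
def split_fixed(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Cut the text into fixed-length chunks, ignoring line breaks and words.

    Consecutive chunks share `overlap` characters so that context at the
    chunk borders is not lost.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Because the cut positions depend only on character counts, every chunk except possibly the last has exactly `chunk_size` characters, so the very short splits produced by heavily structured pages no longer occur.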

This is the state with which we deliver the first productive version of the help. Now we are waiting for the “reality check”: experiences with the first real customers.

It can be clearly seen from our experience report that most of the optimization work concerned the retrieval and especially the text splitter. Once the correct context has been determined, the language model also provides a suitable answer.

We used GPT-4o for the AI help because there are no data protection or data security restrictions. We also tested with local models. Gemma 2 27b (15.6 GB) delivered the best results on our previously used hardware. The test computer had difficulties with even larger models. The results with Gemma were qualitatively quite good, but not quite as good as those of GPT-4o. The performance in terms of speed was significantly worse, but could be improved by a more powerful computer.

Outlook

RAG and chat

Thanks to ChatGPT, many users are accustomed to a chat function. If the answer is not clear or good, you just ask again. This is probably the most promising way to further improve results, including for the help. The problem with RAG is a case distinction: when the user asks the second question, the AI must assess whether it belongs to the old topic, and if so, whether the information already retrieved is sufficient or whether a new search is needed. If it is a new topic, the previous context must be ignored so that it does not lead the user astray. So we are working on getting the case distinction and the follow-up process right.
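The three cases can be expressed as a small dispatch. This is only a sketch of the case distinction as described above, not of a finished solution: how the case is recognized (presumably by a language model) is left open, and the names `FollowUp` and `next_context` as well as the choice to keep the old context alongside new hits in the second case are assumptions.

```python
from enum import Enum
from typing import Callable


class FollowUp(Enum):
    SAME_TOPIC_CONTEXT_SUFFICIENT = "reuse"
    SAME_TOPIC_NEW_SEARCH = "extend"
    NEW_TOPIC = "reset"


def next_context(
    case: FollowUp,
    previous_context: list[str],
    question: str,
    search: Callable[[str], list[str]],
) -> list[str]:
    """Decide which context the language model sees for a follow-up question."""
    if case is FollowUp.SAME_TOPIC_CONTEXT_SUFFICIENT:
        return previous_context                      # no new search needed
    if case is FollowUp.SAME_TOPIC_NEW_SEARCH:
        return previous_context + search(question)   # keep old, add new hits
    return search(question)                          # new topic: drop old context
```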

Feedback

The user can already provide feedback in the help window as to whether or not they found the answer helpful. We see potential for further processes here: for example, in the case of negative feedback, the FAQ could be expanded with the information that eventually answered the question.

About the authors

Maik Dorl is one of the three founders and still one of the managing directors of Projektron GmbH. Since its foundation in 2001, he has shaped the strategic direction of the company and is now responsible for the areas of sales, customer care and product management. As a product manager, he is the driving force behind the integration of innovative AI applications into the project management software Projektron BCS.

Dr. Marten Huisinga is the managing director of teknow GmbH, a platform for laser sheet metal cutting. In the future, AI methods will simplify the offering for amateur customers. Huisinga was one of the three founders and, until 2015, co-managing director of Projektron GmbH, for which he still works in an advisory capacity. As DPO, he is responsible for implementing the first AI applications to assess the benefits of AI for Projektron BCS and Projektron GmbH.